Introduction

The need to remove pathogens from potable water supplies has long been recognised. Pathogenic contamination of water causes disease outbreaks and contributes to background disease rates around the world, with the most serious impacts in the developing world. In this article we outline the characteristics of the main pathogen groups and give an overview of the approaches used to remove or otherwise inactivate pathogens in water, summarising the effectiveness of these approaches and highlighting their significant drawbacks. We focus in particular on the effectiveness of modern pathogen removal technologies, including membrane filtration, which is now being adopted worldwide for a range of reasons. We examine how effectively filtration removes pathogens, both in wastewater treatment and in potable water supply.

Pathogens: sources and characteristics

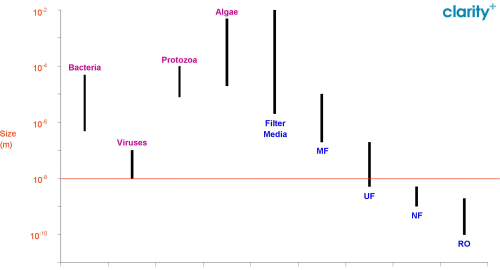

Pathogens, microscopic biological organisms capable of causing disease, include viruses (DNA or RNA enclosed in a protein coat), bacteria (single-celled organisms) and protozoa (also single-celled, but with a distinct membrane-bound nucleus). Toxins released by algae (aquatic photosynthetic unicellular or multicellular species) pose a related waterborne hazard.

The harmful effects of pathogens range from mild acute illness, through severe chronic sickness, to death. Waterborne diseases (transmitted by drinking contaminated water), water-washed diseases (spread through inadequate washing and hygiene, where the quantity of water available for cleansing matters more than its quality) and water-based diseases (where the pathogen or an intermediate host spends part of its life cycle in water) kill millions of people annually. The World Health Organisation (WHO) states that 2.16 million people died of diarrhoeal diseases globally in 2004, more than 80% of them in low-income countries. Cholera, giardiasis, infectious hepatitis, typhoid, amoebic and bacillary dysenteries and bilharzia are among the more common diseases responsible.

By far the most common transmission route is oral ingestion of pathogens derived from human faeces or urine present in contaminated water, including water used for cleansing and washing. Although many pathogens survive only briefly outside the human body, resilient protozoan cysts and oocysts, together with the direct transport of other pathogens, make waterborne transmission a key infection mechanism. Animal faeces and urine also harbour important pathogenic species (e.g. Leptospira, the cause of leptospirosis), whilst further risks, particularly to livestock, arise from toxins released into water by algae and other microbes (e.g. cyanobacteria). In recent years, the increased land-spreading of sewage sludge has required greater attention to sludge treatment to reduce the risk of pathogen contamination.

A range of water treatment approaches can improve the safety of potable water with regard to pathogenic contamination. Treatment efficacy is widely measured using the log removal value (LRV):

$$\mathrm{LRV} = \log_{10}\left(\frac{C_{\mathrm{in}}}{C_{\mathrm{out}}}\right)$$

where $C_{\mathrm{in}}$ is the influent pathogen concentration and $C_{\mathrm{out}}$ is the effluent pathogen concentration.

Hence, for a given pathogen, LRV 2 reflects 99% removal, whilst LRV 4 reflects 99.99% removal.
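As a minimal illustration of the LRV definition, the Python sketch below computes an LRV from influent and effluent concentrations and converts an LRV back into percentage removal, 100 × (1 − 10^−LRV). The function names are illustrative only, and both concentrations are assumed to be in the same units.

```python
import math


def log_removal_value(c_in: float, c_out: float) -> float:
    """LRV = log10(C_in / C_out); both concentrations in the same units."""
    if c_in <= 0 or c_out <= 0:
        raise ValueError("concentrations must be positive")
    return math.log10(c_in / c_out)


def percent_removal(lrv: float) -> float:
    """Percentage of pathogens removed for a given LRV: 100 * (1 - 10**-LRV)."""
    return 100.0 * (1.0 - 10.0 ** -lrv)


# 10 000 organisms per litre in, 1 per litre out
print(log_removal_value(10_000, 1))     # 4.0
print(round(percent_removal(2), 4))     # 99.0
print(round(percent_removal(4), 4))     # 99.99
```

Working on the log scale keeps very large removals readable: the difference between 99.99% and 99.9999% is two log units, which is much easier to compare than the percentages.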

The importance of effective pathogen treatment, particularly for pathogens with a low infective dose (and the indicator organisms used to track them), is reflected in the stringent quality standards set in the industrialised world. The UK Water Supply (Water Quality) Regulations 2000, a national implementation of the European Drinking Water Directive, require zero E. coli and enterococci and no abnormal change in colony counts in water at consumers' taps, whilst the US National Primary Drinking Water Regulations set maximum contaminant level goals (MCLGs) of zero for Cryptosporidium, Giardia lamblia and total coliforms.

Approaches to pathogen treatment

Pathogen treatment is approached in two ways: removal processes and/or inactivation (disinfection) processes. These processes ideally form part of an overarching “multiple-barrier” treatment strategy comprising source water protection (using water of the highest possible initial quality), appropriate pathogen removal, subsequent disinfection and, finally, measures to protect the distribution system from contamination.
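Within a multiple-barrier strategy, the LRVs of barriers in series are commonly added together, because each barrier passes a fraction 10^−LRV of the pathogens reaching it and those fractions multiply. The sketch below assumes the barriers act independently (in practice performance can be correlated, for example when poor coagulation also impairs filtration); the barrier names and LRV values are hypothetical, chosen only to show the arithmetic.

```python
import math


def combined_lrv(barrier_lrvs: dict[str, float]) -> float:
    """Overall LRV for barriers in series, assuming independent performance."""
    # Each barrier passes 10**-LRV of the pathogens that reach it ...
    surviving_fraction = math.prod(10.0 ** -lrv for lrv in barrier_lrvs.values())
    # ... so the fractions multiply and, on a log scale, the LRVs add.
    return -math.log10(surviving_fraction)


# Hypothetical credits for one pathogen (illustrative values, not design figures)
treatment_train = {
    "coagulation + sedimentation": 1.5,
    "media filtration": 2.5,
    "disinfection": 3.0,
}
print(round(combined_lrv(treatment_train), 6))  # 7.0
```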

Removal processes draw on both long-established technologies and more modern treatments. Conventionally, pre-treatment using coarse filters (e.g. gravel, sand) or other means reduces gross turbidity (pathogens are often attached to particles) and is especially effective at reducing algae and protozoa concentrations (LRV 2–3 is readily achievable). Simple settlement in storage reservoirs, or bank filtration, can also provide primary pathogen removal. Storage not only permits settlement but also allows time for bacteria and viruses to die off outside their host environment. However, such simple treatment systems are rarely sufficient on their own to meet the high pathogen removal standards required for effective health protection.
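Die-off during storage is often approximated as first-order decay, C(t) = C0·exp(−kt), which gives LRV = kt / ln 10. The sketch below assumes that model; the decay constant k is hypothetical, since real die-off rates vary widely with organism, temperature, sunlight and water quality.

```python
import math


def storage_lrv(decay_constant_per_day: float, storage_days: float) -> float:
    """LRV from assumed first-order die-off: log10(C0 / C(t)) = k * t / ln(10)."""
    if decay_constant_per_day < 0 or storage_days < 0:
        raise ValueError("decay constant and storage time must be non-negative")
    return decay_constant_per_day * storage_days / math.log(10)


# Hypothetical decay constant of 0.5 per day over 10 days of storage
print(round(storage_lrv(0.5, 10), 2))  # 2.17
```

Under this model the LRV grows linearly with storage time, so doubling the retention doubles the die-off credit; that linearity is a property of the assumed model, not a guarantee for any particular pathogen.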

Pre-treatment is often supplemented by enhanced clarification using coagulation and flocculation followed by sedimentation. Optimised systems can achieve LRV 1 to 2 for viruses, bacteria and protozoa. However, experience around the world has shown that maintaining optimised conditions is difficult, which can lead to highly variable pathogen removal efficiencies. For example, effective coagulation relies on accurate dosing and mixing with often highly variable influent loads, and on effective, well-controlled sludge removal. Furthermore, virus removal can vary significantly between species, and the degree of variation (by as much as LRV 2) is also influenced by coagulant type. Higher LRVs for the major pathogen groups are generally achieved using high-rate clarifiers, although care is required where algae are a problem so as not to disrupt algal cells and release toxins. Dissolved air flotation is a suitable alternative for algae removal (LRV 1 to 2 for many species) and is also effective for Cryptosporidium oocyst removal (LRV 2 to 2.6). Pathogen removal by filtration is discussed in detail below.

The disinfection (inactivation) of pathogens is the second important approach. In outline, oxidative, heat or UV treatments are used: oxidants react with the organic structure of the pathogen, heat kills pathogens by exceeding their thermal tolerance, and UV damages the pathogen's genetic material (DNA or RNA), preventing replication.

The effectiveness of oxidative disinfection is species-specific and, under fixed conditions, generally follows a relationship between disinfectant dose and contact time. Many oxidants are chlorine-based (e.g. chlorine gas, monochloramine, sodium hypochlorite, chlorine dioxide), whilst ozone is one of several alternatives. Although detailed discussion is beyond the scope of this article, disinfection efficiencies (usually expressed as the concentration × time product required to effect a given LRV) can vary significantly between oxidants and are influenced by water turbidity, pH and temperature. Operators choosing an oxidant must also consider the corrosion resistance of plant materials, the safety of staff exposed to these hazardous, corrosive substances, the quantities required, and the storage and stability characteristics of the oxidant.
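The dose-contact time relationship is usually applied through the Ct product: residual disinfectant concentration (mg/L) multiplied by contact time (minutes). A minimal sketch, assuming a target Ct has already been taken from the relevant guidance for the pathogen, oxidant, pH and temperature concerned; the target of 60 mg·min/L and the 0.5 mg/L residual below are hypothetical, not regulatory values.

```python
def ct_value(residual_mg_per_l: float, contact_min: float) -> float:
    """Ct product in mg·min/L: disinfectant residual multiplied by contact time."""
    return residual_mg_per_l * contact_min


def required_contact_time(target_ct: float, residual_mg_per_l: float) -> float:
    """Minutes of contact needed to reach target_ct at a given residual."""
    if residual_mg_per_l <= 0:
        raise ValueError("residual must be positive")
    return target_ct / residual_mg_per_l


# Hypothetical target Ct of 60 mg·min/L with a 0.5 mg/L residual
print(required_contact_time(60, 0.5))  # 120.0
print(ct_value(0.5, 120))              # 60.0
```

The trade-off is direct: halving the residual doubles the contact time (and therefore contact tank volume) needed for the same Ct.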

Thus far, the discussion has focused on drinking water, where very high pathogen treatment efficiencies are necessary. In other circumstances lower-grade pathogen removal may be sufficient, for example where the goal is to protect sensitive environments from pathogen contamination and subsequent environmental self-purification can be relied upon to complete the process. Where effluent is discharged to waters designated under the European Bathing Waters Directive (2006/7/EC), whose classification depends on measured E. coli and intestinal enterococci concentrations, wastewater treatment operators may be required to limit pathogen discharges to surface waters, and requirements may differ between inland and coastal waters. Similarly, the codified Shellfish Waters Directive (2006/113/EC) sets guideline values for faecal coliforms in shellfish harvested within designated areas. Elsewhere, the USEPA has updated its Enhanced Surface Water Treatment Rules, a key objective being the protection of groundwater supplies from pathogenic contamination by percolating surface waters. In all these cases, operators need to evaluate the pathogen removal efficiency of their treatment systems in case their influent becomes contaminated.

Improved pathogen removal technologies

Gravel and slow sand filtration may be the only cost-effective pathogen removal technologies in some circumstances. Depending upon flow rate, media size and uniformity, and filter bed depth, these systems can be very effective (Figure 1). LRVs of up to 5 have been reported in tests, whilst operational experience in the USA shows total coliform LRVs of up to 2.3 and very effective Giardia removal (LRV ca. 4) by sand filters, particularly once microbiological films have become established on the media. However, treatment capacities can be low and pathogen removal efficiencies highly variable; Cryptosporidium removal in particular has generally been relatively poor (LRV often < 0.5).

Low-cost treatment systems are capable of effective pathogen removal if correctly constructed. Studies at several small treatment plants in Spain and the Canary Islands (Improving Coastal & Recreational Waters project, ICREW) demonstrated that such plants can comply with the microbiological requirements of the Bathing Waters and Urban Waste Water Treatment (91/271/EEC) Directives using various conventional (e.g. extended aeration, rotating biological contactor, trickling filter) and non-conventional (stabilisation ponds, peat filters, anaerobic ponds plus trickling filter) technologies.

In the USA, an estimated 25% of the population uses some form of on-site wastewater treatment system, but pathogenic contamination can be problematic. Conventional on-site treatment systems generally work by gravity displacement, with plug flow through the system. Under peak flow conditions treatment efficiency can be poor, and the problem may be exacerbated by failure of tank or pipework integrity, especially where systems are sited above an unconfined aquifer. Improved on-site technologies use time-dosing systems to stabilise hydraulic retention time, followed by a range of filter media (e.g. sand, peat, textile). Where small-scale advanced treatment systems have been installed, compact UV disinfection units can also be added to achieve high-quality treatment.
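Hydraulic retention time (HRT) is the treatment volume divided by the flow through it, which is why surges shorten it. The sketch below compares the HRT of a plug-flow unit during a peak surge with the HRT when time-dosing meters the same daily volume out at its average rate. The tank volume, daily flow and peak flow are hypothetical household figures, and ideal plug flow is assumed.

```python
def hydraulic_retention_time_h(volume_l: float, flow_l_per_h: float) -> float:
    """Hydraulic retention time in hours: treatment volume / flow rate."""
    if flow_l_per_h <= 0:
        raise ValueError("flow must be positive")
    return volume_l / flow_l_per_h


# Hypothetical household system (illustrative figures only)
tank_volume_l = 1500.0
daily_flow_l = 900.0
peak_flow_l_per_h = 300.0  # short surge, e.g. several fixtures draining at once

# Assumed: the dosing timer spreads the daily volume evenly over 24 hours
time_dosed_flow_l_per_h = daily_flow_l / 24

print(hydraulic_retention_time_h(tank_volume_l, peak_flow_l_per_h))        # 5.0
print(hydraulic_retention_time_h(tank_volume_l, time_dosed_flow_l_per_h))  # 40.0
```

In this illustration the surge cuts retention to one eighth of the time-dosed value, which is the mechanism behind poor treatment under peak flows.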

Membrane filtration has become widespread, both in new-build water treatment plants and as a retrofit to existing plants. Over the last decade it has grown in prominence as a viable wastewater treatment approach, as developments in the technology have improved membrane ruggedness, system reliability and cost-effectiveness. In many cases, the primary objective of a membrane filtration system is to remove suspended solids and chemical oxygen demand (COD) to meet stringent discharge consents. The size-exclusion capability of microfiltration (MF) and ultrafiltration (UF) membranes offers the potential for concurrent pathogen removal (Figure 2).

MF removes algae, protozoa and many bacteria effectively (e.g. LRV between 4 and 7 for Giardia and Cryptosporidium using a 0.1 μm membrane), particularly under test conditions, but generally performs less well under operational conditions unless bacterial growth is effectively cleaned from the membranes. Virus removal by MF is poor, although it can be better than simple pore-size considerations would suggest if viruses are strongly associated with particles. Effective virus removal therefore normally requires UF. Virus removal by UF is more efficient with membranes of lower molecular weight cut-off, as the key removal mechanism is physical exclusion (the composition of the biofilm or fouling layer is of secondary influence). Importantly, UF pathogen removal efficiency is largely independent of influent quality and other operating parameters, readily achieving LRV 4 to 7 for the key pathogens.
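The size-exclusion argument can be made concrete with a rough screening sketch that compares a membrane's nominal pore size with approximate size ranges for each pathogen group. The ranges below are typical approximate values assumed for illustration (viruses about 0.02–0.3 μm, bacteria about 0.5–5 μm, protozoan cysts and oocysts about 3–15 μm). Real removal also depends on pore-size distribution, particle association, fouling layers and membrane integrity, so this only indicates where sieving alone would be expected to work.

```python
# Approximate size ranges in micrometres (assumed typical values, not measurements)
PATHOGEN_SIZE_UM: dict[str, tuple[float, float]] = {
    "viruses": (0.02, 0.3),
    "bacteria": (0.5, 5.0),
    "protozoan cysts/oocysts": (3.0, 15.0),
}


def excluded_groups(nominal_pore_um: float) -> list[str]:
    """Groups whose smallest typical size exceeds the nominal pore size."""
    return [
        name
        for name, (smallest_um, _largest_um) in PATHOGEN_SIZE_UM.items()
        if smallest_um > nominal_pore_um
    ]


print(excluded_groups(0.1))   # ['bacteria', 'protozoan cysts/oocysts']  (MF-scale pore)
print(excluded_groups(0.01))  # ['viruses', 'bacteria', 'protozoan cysts/oocysts']  (UF-scale pore)
```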

UF can remove pathogens to the very high degree achieved by chemical oxidative disinfection, without the associated problems and costs of storing and using corrosive agents. However, significant problems arise if membrane integrity fails (e.g. fibres tear or the membrane is scratched), as pathogen removal efficiency can then deteriorate dramatically. More robust membrane materials reduce this risk, but effluent monitoring to identify integrity problems remains an essential component of an MF/UF treatment system. Both direct (e.g. pressure testing) and indirect (e.g. particle monitoring) integrity testing approaches have been used successfully. For potable water supply, disinfection after the membrane stage remains necessary to provide secondary disinfection, maintaining a protective residual in the distribution system.
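Why a small integrity breach matters so much can be shown with a simple mass balance: if a fraction of the feed bypasses the membrane through a defect, that flow carries its full pathogen load. The sketch assumes the breach flow is completely unfiltered and mixes fully with the filtered flow; the function name and example values are hypothetical.

```python
import math


def lrv_with_breach(intact_lrv: float, bypass_fraction: float) -> float:
    """Effective LRV when a fraction of the feed bypasses the membrane unfiltered."""
    if not 0.0 <= bypass_fraction <= 1.0:
        raise ValueError("bypass_fraction must be between 0 and 1")
    # Filtered flow passes 10**-intact_lrv of its pathogens; breach flow passes all.
    passed = (1.0 - bypass_fraction) * 10.0 ** -intact_lrv + bypass_fraction
    return -math.log10(passed)


print(round(lrv_with_breach(6.0, 0.0), 1))   # 6.0  (intact membrane)
print(round(lrv_with_breach(6.0, 1e-4), 1))  # 4.0  (0.01% of flow through a defect)
```

Under these assumptions, a defect passing just 0.01% of the flow cuts an intact LRV of 6 to about 4, because the effective LRV can never exceed −log10 of the bypass fraction.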

In high-quality supply applications, nanofiltration (NF) or reverse osmosis (RO) combined with UV disinfection is well established. MF/UF pretreatment is normally used, with oxidative disinfection commonly employed to restrict biofilm establishment on the NF/RO membranes. UV-C and UV-B light (approximately 200–310 nm) is most effective for pathogen inactivation, with doses of around 30 mW·s/cm² (30 mJ/cm²) suitable for all but the most resilient viruses.
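UV dose (fluence) is the product of average intensity and exposure time, and 1 mW·s/cm² equals 1 mJ/cm². The sketch below uses that relationship to estimate the exposure needed to deliver a target dose of 30 mJ/cm². The reactor intensity of 2 mW/cm² is a hypothetical value; real reactors are sized on validated dose, because intensity varies with lamp output, water UV transmittance and flow path.

```python
def uv_dose_mj_per_cm2(intensity_mw_per_cm2: float, exposure_s: float) -> float:
    """UV dose = average intensity x exposure time (1 mW·s/cm² = 1 mJ/cm²)."""
    return intensity_mw_per_cm2 * exposure_s


def exposure_time_s(target_dose_mj_per_cm2: float, intensity_mw_per_cm2: float) -> float:
    """Seconds of exposure needed to deliver the target dose at a given intensity."""
    if intensity_mw_per_cm2 <= 0:
        raise ValueError("intensity must be positive")
    return target_dose_mj_per_cm2 / intensity_mw_per_cm2


# Hypothetical average reactor intensity of 2 mW/cm²
print(exposure_time_s(30, 2))     # 15.0
print(uv_dose_mj_per_cm2(2, 15))  # 30
```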

Conclusions

The treatment of water for pathogens relies on removal and inactivation processes. A range of technologies, many of them well established, can be combined into an overall pathogen removal strategy that is fit for purpose. For drinking water applications, the highest removal efficiencies are sought, generally through a multiple-barrier approach. Simple media filtration can form a successful part of this strategy if properly implemented and maintained, although modern UF or RO technologies offer a robust alternative; inactivation is still required to establish and maintain disinfection. For more general wastewater discharge applications, MF or UF are viable approaches to effective pathogen removal alongside other water treatment objectives.